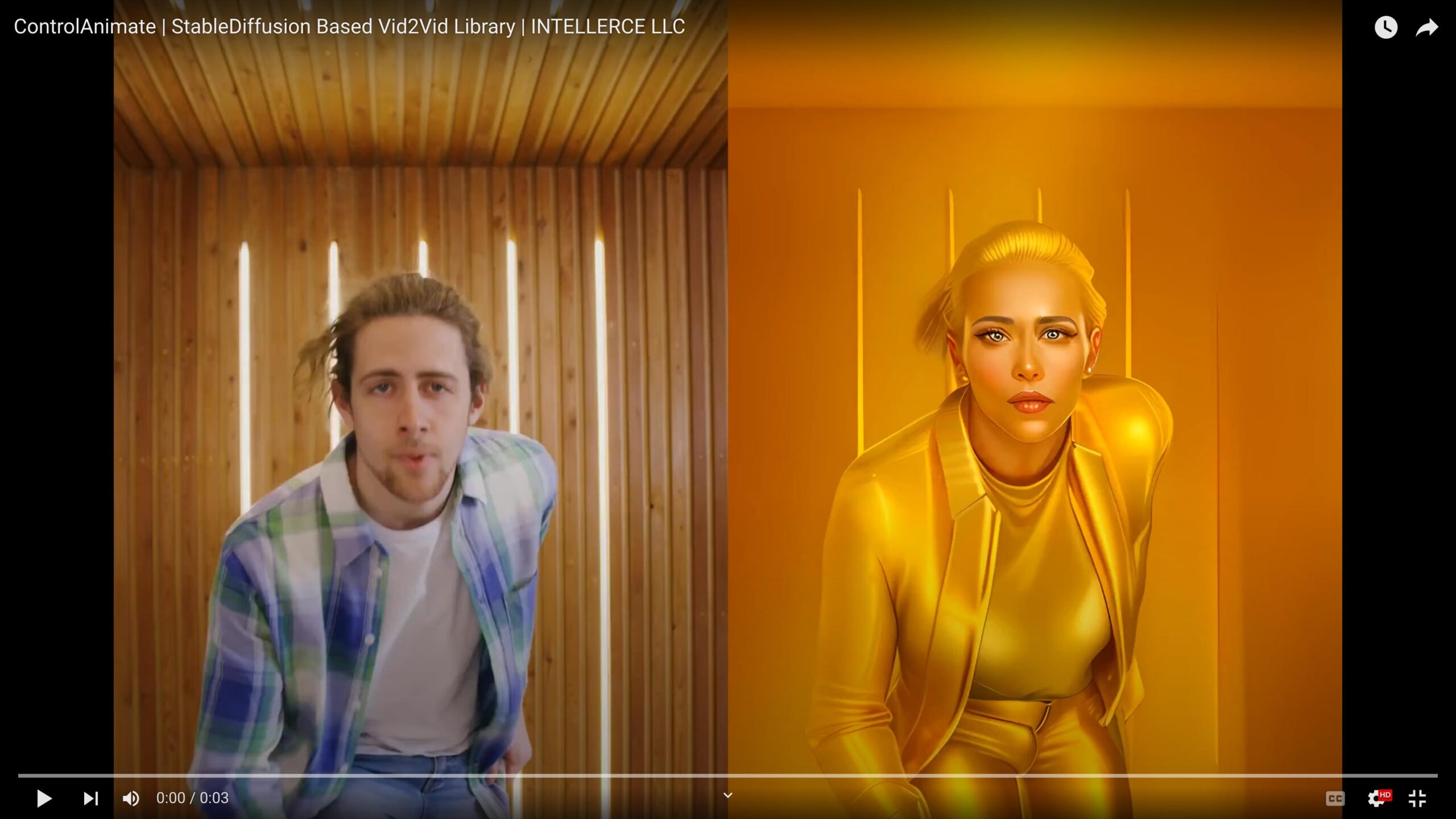

One of the next steps in generative AI is producing video content, which is not a trivial task. To this end, we are releasing ControlAnimate, an open-source library that combines several methods and models (AnimateDiff, Multi-ControlNet, …) with a few tricks (overlapping latents, etc.) to produce more consistent video-to-video (Vid2Vid) results using current state-of-the-art methods.

Repository: https://github.com/intellerce/controlanimate
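
To give a rough idea of what "overlapping latents" can mean, here is a minimal sketch in plain Python. It is not taken from the ControlAnimate code base: the function names `overlapping_windows` and `blend_windows`, the uniform averaging, and the use of a single float per frame in place of a real latent tensor are all assumptions made for illustration. The idea shown is that a long video is processed in short windows that share some frames, and the shared frames are averaged so that neighbouring windows agree at their boundaries.

```python
def overlapping_windows(num_frames, window, overlap):
    """Return (start, end) index pairs that cover num_frames frames.

    Consecutive windows share `overlap` frames (hypothetical helper,
    not part of ControlAnimate).
    """
    if num_frames <= 0:
        raise ValueError("num_frames must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")
    stride = window - overlap
    ranges = []
    start = 0
    while True:
        end = min(start + window, num_frames)
        ranges.append((start, end))
        if end == num_frames:
            break
        start += stride
    return ranges


def blend_windows(window_outputs, ranges, num_frames):
    """Average per-frame values wherever windows overlap.

    Each frame's latent is stood in for by a single float here; a real
    pipeline would blend tensors instead (assumption for illustration).
    """
    totals = [0.0] * num_frames
    counts = [0] * num_frames
    for values, (start, end) in zip(window_outputs, ranges):
        for offset, value in enumerate(values):
            totals[start + offset] += value
            counts[start + offset] += 1
    return [total / count for total, count in zip(totals, counts)]


# Usage: 5 frames, windows of 4 frames that share 1 frame.
ranges = overlapping_windows(5, window=4, overlap=1)
outputs = [[1.0, 1.0, 1.0, 1.0], [3.0, 3.0]]  # made-up per-window results
blended = blend_windows(outputs, ranges, num_frames=5)
```

In this toy run, the frame shared by both windows ends up halfway between the two window results, which is the smoothing effect the overlap is meant to provide. A weighted crossfade instead of a plain average would be another reasonable choice.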
